At JUMPSEC, we foster a research culture and want to provide people with the tools and safe environments they need to conduct research. As part of my ongoing work on setting up a new research lab, I also wanted to investigate TOR environments. By design, TOR (The Onion Router) puts privacy first and provides a very good solution out of the box. I won’t elaborate on the foundations of TOR in this article; instead, I want to use it as an example of the various options and pitfalls of routing in Docker environments. Let’s look at TOR from the perspective of business requirements:

- If devices are domain-joined, adding another Intune package means more maintenance.

- TOR usage in our company should be monitored, for various reasons I won’t elaborate on.

- TOR activity should be hidden from our ISP (without using obfuscated bridges).

I wanted to create a central TOR service that any application can use via an HTTP forward proxy, whether custom scripts or web browsers. Unfortunately, the latter come with some caveats, such as human error and security-related issues. The interesting problem, though, is a technical one: Docker networking. Despite the ongoing debate around combining TOR and VPNs (https://www.reddit.com/r/TOR/wiki/index/#wikishouldiuseavpnwithtor.3Ftorovervpn.2Corvpnovertor.3F), we will route TOR through a paid VPN tunnel.

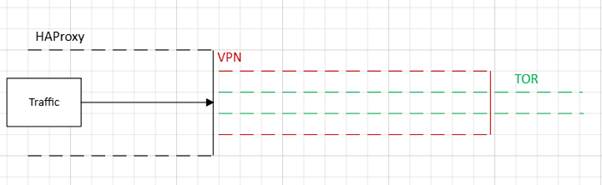

Let’s start building our proxy system based on a docker-compose file that will accept traffic at an HAProxy container and route everything to a TOR container, which itself goes out to the internet via a VPN container.

There are three services:

- vpn – a container to connect to a remote server and use that as an exit node

- tor – the actual TOR connection

- haproxy – our forward proxy for the users

The images we are using are:

- https://hub.docker.com/r/thrnz/docker-wireguard-pia

- https://hub.docker.com/r/dperson/torproxy

- https://hub.docker.com/_/haproxy

For our VPN connection we are using https://www.privateinternetaccess.com/.

Commonly, when configuring a gateway container in Docker Compose, people use `network_mode` so that other containers share the gateway container's network stack, which makes routing plug and play.

This is possible with our PIA VPN container; however, we run into a challenge here. If we configured our TOR container with `network_mode: service:vpn`, it would lose its own IP address and could no longer be “seen” by our HAProxy container. Obviously, this is a problem, as we cannot configure the backend server in HAProxy without a host.

Let’s start with the simplest container setup, which is our forward proxy.

HAProxy

Let’s start by looking at the config file for the service. Below are the contents of the `haproxy.cfg` file:

```haproxy
global
    log stdout format raw local0
    maxconn 4096

defaults
    mode http
    log global
    option httplog
    timeout connect 5000
    timeout client 50000
    timeout server 50000

frontend forward_proxy
    bind *:3128
    mode http
    default_backend forward_tor

backend forward_tor
    mode http
    server tor tor:8118
```

The setup is simple: we create a forward proxy on port 3128 that routes incoming HTTP traffic to the backend `forward_tor`, which points at the `tor` container on port 8118. We can mount the configuration via `volumes` and set `ports` so that the proxy is published on the correct host interface.

```yaml
haproxy:
  image: haproxy:3.1-alpine
  volumes:
    - "./haproxy/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro"
  ports:
    - "<SPECIFIC_IP>:3128:3128"
  depends_on:
    - tor
  restart: unless-stopped
```
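Once the whole stack is running, any script can use the forward proxy. Below is a minimal sketch of such a client, assuming HAProxy is published at `127.0.0.1:3128` (replace it with your `<SPECIFIC_IP>`) and using the Tor Project’s check endpoint `https://check.torproject.org/api/ip`, which reports whether a request arrived via TOR. `PROXY_URL`, `tor_check` and `is_tor_response` are hypothetical names for this example.

```shell
# Hypothetical default: set PROXY_URL to the address HAProxy publishes on the host.
PROXY_URL="${PROXY_URL:-http://127.0.0.1:3128}"

# Fetch the Tor Project's check endpoint through the forward proxy.
# For an https:// URL, curl asks the proxy for a tunnel with CONNECT.
tor_check() {
  curl --silent --show-error --max-time 60 --proxy "$PROXY_URL" \
    https://check.torproject.org/api/ip
}

# Succeed only if the JSON read from stdin reports "IsTor": true.
is_tor_response() {
  grep -Eq '"IsTor"[[:space:]]*:[[:space:]]*true'
}
```

Usage: `tor_check | is_tor_response && echo "Request left via TOR"`. The generous timeout is deliberate, as building TOR circuits can take a while. Since we route TOR over the VPN, the check service sees the TOR exit node, not the VPN address.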

Before jumping into the networking configuration, let’s look at the basic setup of the other containers.

TOR

The TOR container image we are using runs Privoxy, which forwards traffic to TOR’s SOCKS5 proxy. This privacy-focused HTTP and HTTPS proxy is exposed on port 8118.

```yaml
tor:
  image: dperson/torproxy
  restart: unless-stopped
  environment:
    - EXITNODE=0
  cap_add:
    - NET_ADMIN
  expose:
    - 8118
  depends_on:
    - vpn
```

Even though `dperson/torproxy` is not configured as an exit node by default, it does no harm to set the environment variable explicitly. Of course, the `tor` container should only start once the `vpn` container has started.

What differs from the examples on the image’s Docker Hub page is that we added the `NET_ADMIN` capability. It is necessary to change the networking inside the container; without it, even root cannot change the routing behaviour.

VPN

For the VPN container, we can start from the docker-compose example on its Docker Hub page:

```yaml
vpn:
  image: thrnz/docker-wireguard-pia
  volumes:
    - pia-vpn:/pia
    - pia-vpn-shared:/pia-shared
    - ./vpn/post-up.sh:/pia/scripts/post-up.sh
  cap_add:
    - NET_ADMIN
  devices:
    - /dev/net/tun:/dev/net/tun
  env_file:
    - .env
  environment:
    - LOC=swiss
    - USER=${PIA_USERNAME}
    - PASS=${PIA_PASSWORD}
    - LOCAL_NETWORK=${LOCAL_NETWORK}
    - PORT_FORWARDING=1
  sysctls:
    - net.ipv4.conf.all.src_valid_mark=1
    - net.ipv6.conf.default.disable_ipv6=1
    - net.ipv6.conf.all.disable_ipv6=1
    - net.ipv6.conf.lo.disable_ipv6=1
  healthcheck:
    test: ["CMD", "ping", "-c", "1", "8.8.8.8"]
    interval: 240s
    timeout: 5s
    retries: 3
    start_period: 5s
```

You might have spotted that we also mount a `post-up.sh` script via `volumes`. It is needed to add a few more iptables rules inside the container, which brings us to the actual networking challenges.

Networking

Given that we do not want to use `network_mode` but need IP addresses associated with our containers, we are left with a custom network that we attach to each container.

We could simply define a network such as:

```yaml
networks:
  vpn-net:
    driver: bridge
```

However, that would lead to three things:

- The next available subnet from Docker’s default address pool is used, e.g. 172.19.0.0/16 or 172.20.0.0/16.

- The default gateway is automatically set to 172.XX.0.1.

- Our containers get IP addresses assigned dynamically by Docker’s built-in IPAM (not DHCP), so we cannot rely on them.

This is slightly challenging, as we will see in a bit: we want all network traffic from our `vpn-net` to go through the `vpn` container.

By default, however, the containers have 172.XX.0.1 configured as their gateway, whereas the `vpn` container will most likely get the IP address 172.XX.0.2.

First of all, we need to know which IP range is used. For that, we can set it explicitly in the `ipam` configuration of the network. This is a bit hacky and not how Docker is typically meant to be used, but it is a necessary evil in this case.

```yaml
networks:
  vpn-net:
    driver: bridge
    ipam:
      config:
        - subnet: 172.21.0.0/24
```

By setting `ipam`, we now control the actual address space of the `vpn-net` subnet. To verify that this was applied correctly, we can run the following command on the host system:

```shell
# first of all, list the networks on our system
docker network ls

# similar output:
NETWORK ID     NAME           DRIVER    SCOPE
d2b447ed5154   bridge         bridge    local
d0af048c2f55   host           host      local
fe7503bf5f54   none           null      local
c8bdd0108a0a   XXXX           bridge    local
2d1d57de3b79   XXXX_vpn-net   bridge    local
```

Then inspect the network we are working on:

```shell
docker network inspect 2d1d57de3b79
```

```
...
"IPAM": {
    "Driver": "default",
    "Options": null,
    "Config": [
        {
            "Subnet": "172.21.0.0/24"
        }
    ]
},
...
```
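Before handing out fixed addresses, it can be worth checking that they actually fall inside the subnet we just configured. Below is a minimal sketch in plain bash (no external tools); `ip_to_int` and `in_subnet` are hypothetical helper names, not part of Docker.

```shell
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3] ))
}

# Succeed if address $1 lies inside CIDR $2,
# e.g. in_subnet 172.21.0.254 172.21.0.0/24
in_subnet() {
  local addr net prefix mask
  addr=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  prefix=${2#*/}
  # Build the netmask; a /0 prefix matches everything.
  mask=$(( prefix == 0 ? 0 : (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  (( (addr & mask) == (net & mask) ))
}
```

For example, `in_subnet 172.21.0.254 172.21.0.0/24 && echo ok`. Picking an address at the top of the range (such as .254) also makes it less likely to collide with addresses Docker’s IPAM assigns dynamically, which typically start right after the .1 gateway.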

Next, we give the `vpn` container a fixed IP address by adding the following to its service definition:

```yaml
networks:
  vpn-net:
    ipv4_address: 172.21.0.254
```

This gives the `vpn` container the fixed IP address 172.21.0.254, which we need so that we can configure the `tor` container’s routes to use it as the gateway. To do that, we override the startup command of the `tor` container with:

```yaml
command: >
  sh -c "
  echo 'Removing default route via 172.21.0.1...';
  ip route del default via 172.21.0.1 dev eth0;
  echo 'Adding default route via 172.21.0.254...';
  ip route add default via 172.21.0.254 dev eth0;
  echo 'Starting torproxy...';
  exec /usr/bin/torproxy.sh
  "
```

When you run `docker compose up`, you should see the `echo` statements in the log. Mind you, the `ip route del|add` commands only work with the `NET_ADMIN` capability!

The commands above remove the default Docker gateway, which we established is 172.21.0.1, and set our `vpn` container, which we know is on 172.21.0.254, as the new gateway.
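A slightly more defensive variant of this step is sketched below as an alternative, not what the setup above uses. `ip route replace` installs the default route whether or not one already exists, so it does not depend on the old gateway being exactly 172.21.0.1. iproute2 supports `replace`; I would expect BusyBox’s `ip` applet to as well, but check inside your image. `set_default_gw` is a hypothetical helper name.

```shell
# Point the default route of this container at a given gateway.
# "ip route replace" adds the route if it is missing and overwrites it
# otherwise, so the previous gateway does not need to be known.
# Usage: set_default_gw GATEWAY [DEVICE]
set_default_gw() {
  local gw=$1 dev=${2:-eth0}
  ip route replace default via "$gw" dev "$dev"
}
```

In the compose file, the same idea would read `command: sh -c "ip route replace default via 172.21.0.254 dev eth0 && exec /usr/bin/torproxy.sh"`. Chaining with `&&` means torproxy is never started if the route change fails. With the `;`-separated version above, a failed `ip route` call would still start torproxy, which could then reach the internet via the Docker gateway instead of the VPN.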

Unfortunately, this is not enough to get everything working. Most likely, your requests would time out, as the `tor` container cannot establish a connection at this stage. So let’s dive into the `post-up.sh` file for the `vpn` container, where we set a few more iptables rules:

```bash
#!/usr/bin/env bash
set -euo pipefail

sleep 5

iptables -t nat -A POSTROUTING -s "172.21.0.0/16" -j MASQUERADE
iptables -I FORWARD 1 -s 172.21.0.0/16 -o wg0 -j ACCEPT
iptables -I FORWARD 1 -d 172.21.0.0/16 -i wg0 -j ACCEPT
iptables -I FORWARD 1 -i wg0 -m state --state ESTABLISHED,RELATED -j ACCEPT
iptables -I FORWARD 1 -o wg0 -j ACCEPT

echo "Done with iptables changes"
```

BONUS TIP: if you are on Windows and use git to manage all of this, run:

```shell
git update-index --chmod=+x vpn/post-up.sh
```

to ensure that the script is executable. Otherwise, it won’t work!

During my testing, I ran into a race condition with the execution of `post-up.sh`. The image author’s intention is to let users adjust iptables rules after the WireGuard connection has been set up. However, the script was often executed before that was done, and because of the kill switch in the container, the iptables rules applied by my script were reverted. Hence, I added a `sleep 5` at the top of the script. It’s a quick and dirty solution… but it gets the job done! Let’s go over each of these rules:

```shell
iptables -t nat -A POSTROUTING -s "172.21.0.0/16" -j MASQUERADE
```

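This appends a rule for all traffic from 172.21.0.0/16 to the POSTROUTING chain of the nat table, which packets traverse after the kernel has determined where they will go out (after routing). MASQUERADE rewrites the source address of these packets to the address of the interface they leave through, so for traffic leaving via `wg0`, the remote side sees the `vpn` container’s WireGuard address instead of the `tor` container’s 172.21.0.x address.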

```shell
iptables -I FORWARD 1 -s 172.21.0.0/16 -o wg0 -j ACCEPT
iptables -I FORWARD 1 -d 172.21.0.0/16 -i wg0 -j ACCEPT
```

Effectively, these two rules allow traffic from 172.21.0.0/16 to go out over the WireGuard connection and allow returning traffic to be forwarded back to the 172.21.0.0/16 network.

You might think that this is enough; however, the default policy of the FORWARD chain is DROP, so anything not explicitly accepted is discarded. Let’s add two more rules. The first one:

```shell
iptables -I FORWARD 1 -i wg0 -m state --state ESTABLISHED,RELATED -j ACCEPT
```

This rule allows incoming packets on the wg0 interface that are part of or related to an already established connection. This is essential for ensuring that reply traffic for outbound connections is permitted.

In addition, we need our last rule:

```shell
iptables -I FORWARD 1 -o wg0 -j ACCEPT
```

This rule allows all packets that are leaving through the wg0 interface. It doesn’t perform any state checking, so it applies to both new outbound connections and any other traffic leaving via wg0.

Together, these rules help maintain proper stateful firewall behaviour for a WireGuard interface, ensuring that traffic can flow correctly for both new outbound connections and their corresponding inbound replies.
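Two weak spots of `post-up.sh` as written are the fixed `sleep 5` and the fact that `-A` and `-I` add a fresh copy of a rule every time they run, so rules would pile up as duplicates if the script ever ran more than once. Below is a sketch of two hypothetical helpers addressing both. It assumes bash, an iptables build that supports `-C` (check), and that the appearance of `wg0` is a usable readiness signal. Given the kill switch behaviour described above, that last assumption may not hold on its own, so treat this as a starting point rather than a drop-in fix.

```shell
# Helpers for a more defensive post-up.sh (hypothetical, not part of the image).

# Run a command once per second until it succeeds or TIMEOUT seconds pass.
# Returns 0 as soon as the command succeeds, 1 on timeout.
# Usage: wait_until TIMEOUT COMMAND [ARGS...]
wait_until() {
  local timeout=$1 waited=0
  shift
  until "$@"; do
    if (( waited >= timeout )); then
      return 1
    fi
    sleep 1
    waited=$(( waited + 1 ))
  done
  return 0
}

# Add an iptables rule only if an identical rule is not already present.
# "iptables -C" exits 0 if a matching rule exists and non-zero otherwise.
# Usage: ensure_rule TABLE -A|-I CHAIN RULE-SPEC...
#   -A appends the rule; -I inserts it at position 1, as post-up.sh does.
ensure_rule() {
  local table=$1 action=$2 chain=$3
  shift 3
  if iptables -t "$table" -C "$chain" "$@" 2>/dev/null; then
    return 0
  fi
  if [[ $action == -I ]]; then
    iptables -t "$table" -I "$chain" 1 "$@"
  else
    iptables -t "$table" -A "$chain" "$@"
  fi
}

# How post-up.sh could use them (not executed here):
#   wait_until 30 ip link show wg0 >/dev/null 2>&1 \
#     || { echo "wg0 did not appear" >&2; exit 1; }
#   ensure_rule nat    -A POSTROUTING -s 172.21.0.0/16 -j MASQUERADE
#   ensure_rule filter -I FORWARD -s 172.21.0.0/16 -o wg0 -j ACCEPT
#   ensure_rule filter -I FORWARD -d 172.21.0.0/16 -i wg0 -j ACCEPT
#   ensure_rule filter -I FORWARD -i wg0 -m state --state ESTABLISHED,RELATED -j ACCEPT
#   ensure_rule filter -I FORWARD -o wg0 -j ACCEPT
```

With the check in front, a second run of the script leaves the chains unchanged instead of stacking duplicates at the top of FORWARD.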

Before giving you some troubleshooting guides and considerations, let’s put the final docker-compose.yml file together:

```yaml
services:
  vpn:
    image: thrnz/docker-wireguard-pia
    volumes:
      - pia-vpn:/pia
      - pia-vpn-shared:/pia-shared
      - ./vpn/post-up.sh:/pia/scripts/post-up.sh
    cap_add:
      - NET_ADMIN
    devices:
      - /dev/net/tun:/dev/net/tun
    env_file:
      - .env
    environment:
      - LOC=swiss
      - USER=${PIA_USERNAME}
      - PASS=${PIA_PASSWORD}
      - LOCAL_NETWORK=${LOCAL_NETWORK}
      - PORT_FORWARDING=1
    sysctls:
      - net.ipv4.conf.all.src_valid_mark=1
      - net.ipv6.conf.default.disable_ipv6=1
      - net.ipv6.conf.all.disable_ipv6=1
      - net.ipv6.conf.lo.disable_ipv6=1
    healthcheck:
      test: ["CMD", "ping", "-c", "1", "8.8.8.8"]
      interval: 240s
      timeout: 5s
      retries: 3
      start_period: 5s
    networks:
      vpn-net:
        ipv4_address: 172.21.0.254

  tor:
    image: dperson/torproxy
    restart: unless-stopped
    environment:
      - EXITNODE=0
    cap_add:
      - NET_ADMIN
    expose:
      - 8118
    depends_on:
      - vpn
    networks:
      vpn-net:
        ipv4_address: 172.21.0.10
    command: >
      sh -c "
      echo 'Removing default route via 172.21.0.1...';
      ip route del default via 172.21.0.1 dev eth0;
      echo 'Adding default route via 172.21.0.254...';
      ip route add default via 172.21.0.254 dev eth0;
      echo 'Starting torproxy...';
      exec /usr/bin/torproxy.sh
      "

  haproxy:
    image: haproxy:3.1-alpine
    volumes:
      - "./haproxy/haproxy.cfg:/usr/local/etc/haproxy/haproxy.cfg:ro"
    ports:
      - "<HOST_IP>:3128:3128"
    depends_on:
      - tor
    restart: unless-stopped
    networks:
      vpn-net:
        ipv4_address: 172.21.0.11

volumes:
  pia-vpn:
  pia-vpn-shared:

networks:
  vpn-net:
    driver: bridge
    ipam:
      config:
        - subnet: 172.21.0.0/24
```

Troubleshooting and Debugging

Ensure the TOR container is routing traffic through the VPN container:

```shell
docker exec -it tor-container ip route
# Expect to see the vpn IP address we specified in the docker compose file:
default via 172.21.0.254 dev eth0
172.21.0.0/16 dev eth0 scope link src 172.21.0.4
```

Verify that the VPN container has access to the internet via the default docker gateway:

```shell
docker exec -it vpn-container ip route
# Expect the default Docker gateway as the default route, and the vpn IP address
# we specified in the docker compose file as the source address:
default via 172.21.0.1 dev eth0
172.21.0.0/16 dev eth0 scope link src 172.21.0.254
```
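To script these two route checks, the default gateway can be pulled out of the `ip route` output. A minimal sketch; `default_gw` is a hypothetical helper, and the container names are the same placeholders as above.

```shell
# Print the gateway of the first "default via ..." route read from stdin.
default_gw() {
  awk '$1 == "default" && $2 == "via" { print $3; exit }'
}

# Example (not executed here):
#   [ "$(docker exec tor-container ip route | default_gw)" = 172.21.0.254 ] \
#     && echo "tor routes via the vpn container"
```

Drop `-it` when piping `docker exec` output into another command, as the pseudo-TTY can add carriage returns to the output and break the string comparison.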

Check that the IP address of the vpn container is actually coming from the PIA remote server:

```shell
docker exec -it vpn-container curl ifconfig.me/ip
# Should be different from your host IP (run the same curl command on the host, outside any container):
12.34.56.78
```

Ensure that your FORWARD rules are loaded correctly:

```shell
docker exec -it vpn-container iptables -L FORWARD -n -v
# Similar to below:
Chain FORWARD (policy DROP)
 pkts bytes target  prot opt in   out  source         destination
   42  3333 ACCEPT  all  --  *    wg0  172.21.0.0/16  0.0.0.0/0
   12   900 ACCEPT  all  --  wg0  *    0.0.0.0/0      172.21.0.0/16
```
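For scripting, `iptables -S FORWARD`, which prints the rules in the same syntax used to add them, is easier to check than the `-L` table. The sketch below lists which of the `post-up.sh` FORWARD rules are missing. The expected lines are written in the normalised form I would expect `iptables -S` to print (for instance, the state list as `RELATED,ESTABLISHED`); compare them against your actual output and adjust. `missing_forward_rules` is a hypothetical helper.

```shell
# Expected FORWARD rules from post-up.sh, in "iptables -S" syntax.
# Assumption: this is how iptables -S prints them; verify against your output.
EXPECTED_FORWARD_RULES=(
  "-A FORWARD -s 172.21.0.0/16 -o wg0 -j ACCEPT"
  "-A FORWARD -d 172.21.0.0/16 -i wg0 -j ACCEPT"
  "-A FORWARD -i wg0 -m state --state RELATED,ESTABLISHED -j ACCEPT"
  "-A FORWARD -o wg0 -j ACCEPT"
)

# Read "iptables -S FORWARD" output on stdin and print every expected rule
# that does not appear as an exact line. Returns 1 if anything is missing.
missing_forward_rules() {
  local rules rule status=0
  rules=$(cat)
  for rule in "${EXPECTED_FORWARD_RULES[@]}"; do
    if ! grep -Fxq -- "$rule" <<< "$rules"; then
      printf '%s\n' "$rule"
      status=1
    fi
  done
  return "$status"
}

# Example (not executed here):
#   docker exec vpn-container iptables -S FORWARD | missing_forward_rules
```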

Go into the vpn container and install tcpdump to observe traffic:

```shell
docker exec -it vpn-container bash
$ apk add tcpdump
...
# observe the WireGuard interface
tcpdump -ni wg0
# check for traffic from a specific host
tcpdump -ni eth0 host 172.21.0.XX
```

While running `tcpdump` in the `vpn` container, run simple `curl` commands from the `tor` container. If you see `ip: RTNETLINK answers: Operation not permitted`, you forgot to add the `NET_ADMIN` capability; you would see that error with any `ip route add|del` command.

If you are using different TOR images, you must check if there is a transparent proxy running:

It might be the case that all network traffic is forced out via TOR instead of being forwarded to your default gateway. How is this relevant? If you run `curl ifconfig.me/ip`, the request might go out via TOR instead of the default gateway. In our case, the normal gateway route is used, so we get the same IP address as the `vpn` container shows for the same command.
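This comparison can be scripted. A sketch, assuming `curl` is available in both containers (the checks above already rely on it) and reusing the placeholder container names; `egress_ip` and `egress_report` are hypothetical helpers.

```shell
# Public IP as seen from the host (no argument) or from inside a container.
# Assumes curl exists in the container and ifconfig.me is reachable.
egress_ip() {
  if [ -n "${1:-}" ]; then
    docker exec "$1" curl -s --max-time 20 ifconfig.me/ip
  else
    curl -s --max-time 20 ifconfig.me/ip
  fi
}

# Compare host, vpn and tor egress IPs and print one verdict per container.
# Usage: egress_report VPN_CONTAINER TOR_CONTAINER
egress_report() {
  local host vpn tor
  host=$(egress_ip)
  vpn=$(egress_ip "$1")
  tor=$(egress_ip "$2")

  if [ -z "$vpn" ]; then
    echo "vpn: no response"
  elif [ "$vpn" = "$host" ]; then
    echo "vpn: NOT tunnelled (same IP as host)"
  else
    echo "vpn: tunnelled"
  fi

  if [ -z "$tor" ]; then
    echo "tor: no response"
  elif [ "$tor" = "$vpn" ]; then
    echo "tor: uses the vpn route"
  elif [ "$tor" = "$host" ]; then
    echo "tor: bypasses the vpn"
  else
    echo "tor: different exit IP (possibly a transparent TOR proxy)"
  fi
}
```

Usage: `egress_report vpn-container tor-container`. In our setup, “tor: uses the vpn route” is the expected result, since `curl` inside the `tor` container follows its default route rather than going through TOR.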

Inspect your TOR container scripts:

In the image’s Dockerfile on GitHub, we can see that there are plenty of Privoxy rules around forwarding Docker networks:

```shell
sed -i '/^forward 172\.16\.\*\.\*\//a forward 172.17.*.*/ .' $file && \
sed -i '/^forward 172\.17\.\*\.\*\//a forward 172.18.*.*/ .' $file && \
sed -i '/^forward 172\.18\.\*\.\*\//a forward 172.19.*.*/ .' $file && \
sed -i '/^forward 172\.19\.\*\.\*\//a forward 172.20.*.*/ .' $file && \
sed -i '/^forward 172\.20\.\*\.\*\//a forward 172.21.*.*/ .' $file && \
...
sed -i '/^forward 172\.29\.\*\.\*\//a forward 172.30.*.*/ .' $file && \
sed -i '/^forward 172\.30\.\*\.\*\//a forward 172.31.*.*/ .' $file && \
```
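For context: in Privoxy’s `forward` directive, a target of `.` means “no parent proxy, connect directly”. As far as I can tell, these lines therefore make Privoxy reach the private 172.16.0.0/12 range (which covers Docker’s default bridge subnets) directly instead of through TOR. The block of lines the sed chain builds follows a simple pattern, sketched here with a hypothetical helper `privoxy_docker_exemptions`; the exact contents of the image’s config may differ, since part of the sed chain is elided above.

```shell
# Print one Privoxy "forward" exemption per second octet of 172.16.0.0/12.
# "forward <pattern> ." means: do not hand the request to a parent proxy.
privoxy_docker_exemptions() {
  local octet
  for (( octet = 16; octet <= 31; octet++ )); do
    printf 'forward 172.%d.*.*/ .\n' "$octet"
  done
}
```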

Final Thoughts and Notes

This project is certainly not meant to be used “as-is”. Whether this approach is safe for privacy-conscious users is another topic; the TOR Project itself gives good guidelines around bridges and safety. Tails and the standard TOR Browser protect users much better than a “normal” web browser. This article is not about protection.

Instead, I hope you learned something about networking in Docker and how to troubleshoot within Docker containers. Even though some of what we did is not in line with the Docker philosophy (setting fixed IP addresses on containers, IPAM settings), we have seen that more complex tasks like these are straightforward to implement.